Happy 1 year CARLA: The expected CARLA 0.9.1 release is finally among us!

It has been a long journey for the CARLA simulator. The team started this adventure back at the end of 2016 aiming to bring a state-of-the-art Autonomous Driving simulator to the open source community. Our goals were clear: democratising Autonomous Driving via accessible simulation and creating a platform that enables academics and industry members to share knowledge and results in the open. In November of 2017 we made the first public release of CARLA. At that time, the platform was a humble simulator built around the use cases of camera-based policy learning and data acquisition tasks. A great starting point but not enough. Therefore, we decided to completely redesign the platform to produce a more advanced and flexible autonomous driving simulator, with the following objectives in mind:

- Users must be able to create new contents (aka maps, levels) in a simple fashion

- Users must be able to create complex traffic scenarios (aka traffic situations)

- Users must be able to leverage any existing sensor used in autonomous driving

- Users must be able to automatically train and assess their driving agents (driving stacks)

- The platform must be scalable, i.e. computation is distributed among different nodes

We have dedicated the past 10 months to redesign the platform to achieve these objectives. The 0.9.0 release was an initial showcase of this effort, with the introduction of the new CARLA API, the multi-client architecture and the capacity of controlling all vehicles of the simulation at will.

In this 0.9.1 release, we have added critical features to enable the ingestion of new contents (maps) made with external tools and the simple navigation of these maps using a new API based on waypoints and map queries. To this end, we have adopted ASAM OpenDRIVE as the core of CARLA’s road network format. Our map representation is built on top of OpenDRIVE, allowing for a easy-to-use and versatile navigation API. We also introduce Town03, a complex urban scene with multi-lane roads, tunnels, roundabouts and many other interesting features, as an example of a map generated semi-automatically from an external tool.

Now let’s dive in the new features of this release, and please beware this is still a development release!

Driving simulation messages

All the driving messages produced by the server in the 0.8.X branch have been incorporated back as sensors to CARLA 0.9.1. This enables the client to detect collisions and determine lane changes. Collisions against the static layout of the scene and dynamic objects like vehicles are detected by the new sensor.other.collision.

class CollisionSensor(object):

"""

Encapsulate sensor.other.collision messages

"""

def __init__(self, parent_actor):

self._parent = parent_actor

self._history = collections.defaultdict(int)

bp = world.get_blueprint_library().find('sensor.other.collision')

self._sensor = world.spawn_actor(bp, carla.Transform(), attach_to=self._parent)

# We need to pass the lambda a weak reference to self to avoid circular

# reference.

weak_self = weakref.ref(self)

self.sensor.listen(lambda event: CollisionSensor._on_collision(weak_self, event))

@staticmethod

def _on_collision(weak_self, event):

self = weak_self()

if not self:

return

actor_type = ' '.join(event.other_actor.type_id.replace('_', '.').title().split('.')[1:])

self._hud.notification('Collision with %r' % actor_type)

impulse = event.normal_impulse

intensity = math.sqrt(impulse.x**2 + impulse.y**2 + impulse.z**2)

self._history.append((event.frame_number, intensity))

...

In this example we will receive a message each time the parent_actor (a vehicle) crashes against other actor of the scene, indicating its type and the magnitude of the impact. This sensor also reports collisions against static elements of the map, such as traffic signs, walls, traffic lights, sidewalks, etc.

A new sensor sensor.other.lane_detector can be now used to detect lane changes. New CARLA maps are more complex, containing multi-lane roads. In this context, it is important to detect when vehicles are changing lanes, determining which type of lanemarking the vehicle has crossed and what is the direction of the target lane. See code example below:

class LaneInvasionSensor(object):

def __init__(self, parent_actor, hud):

self.sensor = None

self._parent = parent_actor

self._hud = hud

world = self._parent.get_world()

bp = world.get_blueprint_library().find('sensor.other.lane_detector')

self.sensor = world.spawn_actor(bp, carla.Transform(), attach_to=self._parent)

# We need to pass the lambda a weak reference to self to avoid circular

# reference.

weak_self = weakref.ref(self)

self.sensor.listen(lambda event: LaneInvasionSensor._on_invasion(weak_self, event))

@staticmethod

def _on_invasion(weak_self, event):

self = weak_self()

if not self:

return

text = ['%r' % str(x).split()[-1] for x in set(event.crossed_lane_markings)]

self._hud.notification('Crossed line %s' % ' and '.join(text))

Map and Waypoint representations

It is possible now to get access to a high-level representation of the road network via the Map class. Map objects let us get recommended spawning points for vehicles (places where it is safe to instantiate new objects):

map.get_spawn_points()

It also makes driving from client side very easy through the new waypoint querying API. In the code below we obtain the waypoint associated to the current location of an actor.

w = map.get_waypoint(location)

Users can also generate waypoints up to a given distance:

map.generate_waypoints(distance)

And overall, users can make use of these new functions to create their own navigation algorithms:

client = carla.Client(args.host, args.port)

client.set_timeout(2.0)

hud = HUD(args.width, args.height)

world = World(client.get_world(), hud)

world.vehicle.set_simulate_physics(False)

m = world.world.get_map()

w = m.get_waypoint(world.vehicle.get_location())

clock = pygame.time.Clock()

count = 0

while True:

clock.tick_busy_loop(60)

world.tick(clock)

world.render(display)

pygame.display.flip()

if count % 10 == 0:

nexts = list(w.next(1.0))

print('Next(1.0) --> %d waypoints' % len(nexts))

if not nexts:

raise RuntimeError("No more waypoints!")

w = random.choice(nexts)

text = "road id = %d, lane id = %d, transform = %s"

print(text % (w.road_id, w.lane_id, w.transform))

if count % 40 == 0:

draw_waypoints(world.world, w)

count = 0

t = w.transform

world.vehicle.set_transform(t)

count += 1

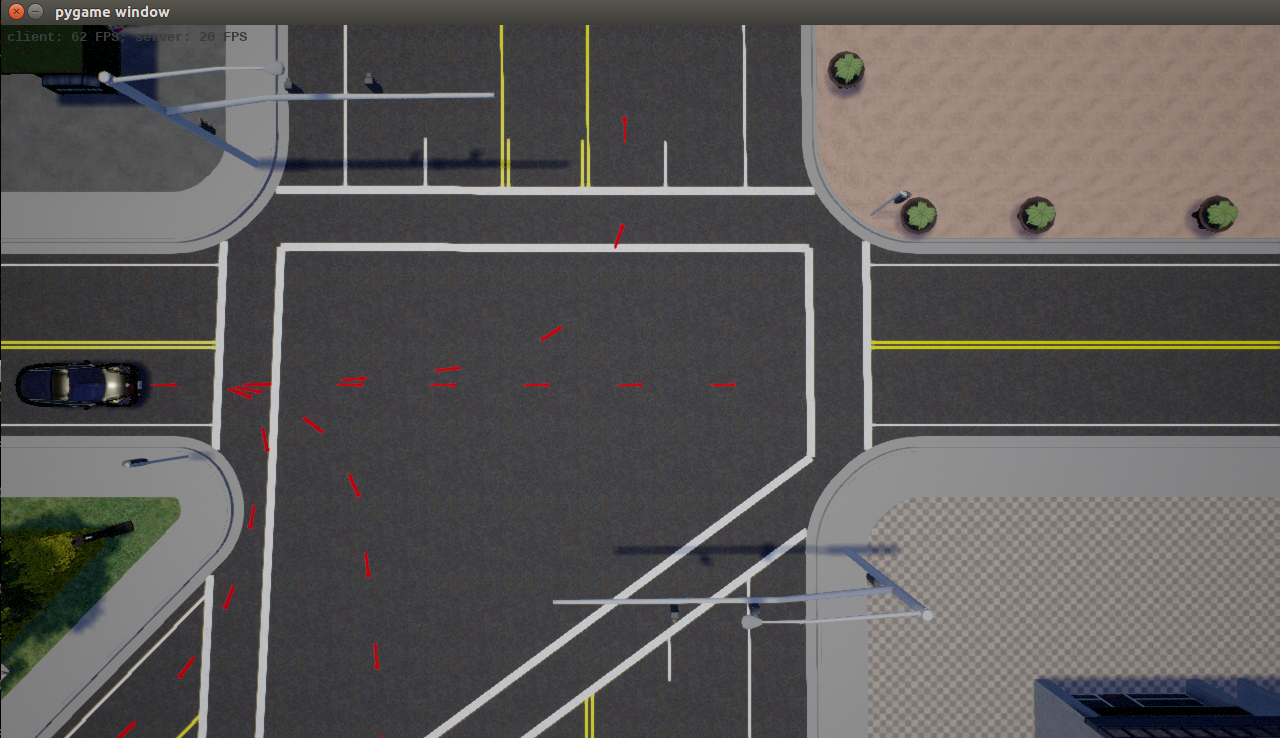

Figure 1. Waypoints generated on-the-fly using the new Waypoint class

Pipeline for semi-automatic map generation

One of the most exciting additions of this release is the compatibility with new maps created with external tools. We want users to easily create their own maps in CARLA. Towards this end we adopted OpenDRIVE as our map definition standard. Using a well-known standard reduces the effort needed to create new contents compatible with the CARLA simulator.

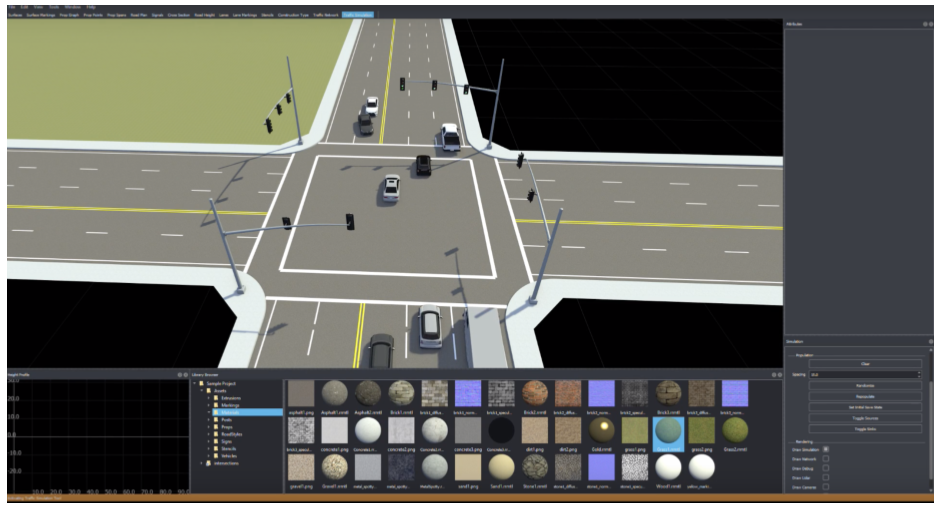

We have been collaborating closely with VectorZero to guarantee full compatibility between RoadRunner and CARLA. RoadRunner is a tool that can produce complex driving maps in a few clicks thanks to its powerful procedural generation engine. What is best, it also produces the OpenDRIVE file associated to the 3D map, so maps produced by RoadRunner can be directly used in CARLA with all the functionalities available for the current towns.

VectorZero has commited to give free licenses to use RoadRunner for academic and research purposes to those academics who request it. So, check out their website to obtain a license and start building your own maps!

Figure 2. Example of RoadRunner scene

Things missing

- Full synchronous mode

- Simulation of pedestrians

- Fully functional ROS bridge

These functionalities will be added very soon. We will keep improving this API in the coming releases. If you find any issues or have suggestions that can be added, please do not hesitate to share it with the community at our GitHub or Discord chat. For the full list of methods available take a look at the Python API Reference.

Big thanks to all our supporters and sponsors for making this project a reality. And happy 1 year CARLA!

Full list of changes

- New Town03 generated using RoadRunner from VectorZero

- Python API enhancements

- Support for Python 3

- Support for retrieving and changing lighting and weather conditions

- Lidar sensor support

- Image converter methods support: Depth, LogarithmicDepth, and CityScapesPalette

- IO methods support for sensor data, “save_to_disk” available for PNG, JPEG, TIFF, and PLY

- Added collision event sensor, “sensor.other.collision”, that triggers a callback on each collision to the actor it is attached to

- Added lane detector sensor, “sensor.other.lane_detector”, that detects lane invasion events

- Added

carla.Mapandcarla.Waypointclasses for querying info about the road layout- Added methods for converting and saving the map as OpenDRIVE format

- Added

map.get_spawn_points()to retrieve the recommended spawn points for vehicles - Added

map.get_waypoint(location)to query the nearest waypoint - Added

map.generate_waypoints(distance)to generate waypoints all over the map at an approximated distance - Added

map.get_topology()for getting a list with tuples of waypoints defining the edges of the road graph - Added

waypoint.next(distance)to retrieve the list of the waypoints at a certain distance that can be reached from a given waypoint

- Added bounding box attributes to vehicles,

vehicle.bounding_box - Added

parentattributes to actors, not None if the actor is attached to another actor - Added function to enable/disable simulating physics on an actor,

actor.set_simulate_physics(enabled=True) - Added support for requesting the list of all actors alive in the current world,

world.get_actors() -

world.get_actors()returns anActorListobject withfilterfunctionality and lazy initialization of actors - Added

semantic_tagsto actors containing the list of tags of all of its components (these tags match the tags retrieved by the semantic segmentation sensor) - Exposed last control applied to vehicles,

vehicle.get_vehicle_control() - Added a “tick” message containing info of all the actors in the scene

- Executed in the background and cached

- Added

world.wait_for_tick()for blocking the current thread until a “tick” message is received - Added

world.on_tick(callback)for executing a callback asynchronously each time a “tick” message is received. These methods return/pass acarla.Timestampobject containing, frame count, delta time of last tick, global simulation time, and OS timestamp - Methods retrieving actor’s info, e.g.

actor.get_transform(), don’t need to connect with the simulator, which makes these calls quite cheap

- Allow drawing debug shapes from Python: points, lines, arrows, boxes, and strings (

world.debug.draw_*) - Added id (id of current episode) and map name to

carla.World - Exposed traffic lights and traffic signs as actors. Traffic lights have a specialized actor class that exposes the traffic light state (red, green, yellow) as a property

- Added methods for accessing and modifying individual items in

carla.Image(pixels) andcarla.LidarMeasurement(locations) - Added

carla.Vector3Dfor (x, y, z) objects that are not acarla.Location - Removed

client.ping()and addedclient.get_server_version(), which accomplishes the same purpose - Renamed

contains_X()methods tohas_X() - Changed timeout to use seconds (float)

- Allow iterations over attributes of an Actor’s Blueprint

- Fixed wildcard filtering issues, now “vehicle.” or “bmw*” patterns work too!

- Fixed

actor.set_transform()broken for attached actors

- More Python example scripts and improved the present ones

- Now all the scripts use the list of recommended spawn points for each map

- Renamed “example.py” to “tutorial.py”, and updated it with latest changes in API

- Added timeout to the examples

- “manual_control.py” performance has been improved while having more measurements

- “manual_control.py” now has options to change camera type and position

- “manual_control.py” now has options to iterate weather presets

- “manual_control.py” now has a fancier HUD with lots of info, and F1 key binding to remove it

- Added “dynamic_weather.py” to change the weather in real-time (the one used in the video)

- Added “spawn_npc.py” to quickly add a lot of NPC vehicles to the simulator

- Added “spawn_npc.py –safe” to only add non-problematic vehicles

- “vehicle_gallery.py” also got some small fixes

- Asset and content improvements

- Improved control of bikes and motorbikes, still not perfect but causes less accidents

- Adjusted the maximum distance culling for foliage

- Adjusted pedestrian animations and scale issues (not yet available with new API though)

- Adjusted vehicle physics and center of mass

- Fixed filenames too long when packing the project on Windows

- Fixed “SplineMeshRepeater” loses its collider mesh from time to time

- New system for road information based on OpenDRIVE format

- Added new map classes for querying info about the road layout and topology

- Added methods for finding closest point on the road

- Added methods for generating and iterating waypoints based on the road layout

- Added OpenDRIVE parser to convert OpenDRIVE files to our map data structures

- Other miscellaneous improvements and fixes

- Fixed single channel Lidar crash (by @cwecht)

- Fixed command-line argument

-carla-settingsfails to load absolute paths (by @harlowja) - Added an option to command-line to change quality level when launching the simulator,

-quality-level=Low - Added ROS bridge odometry message (by @ShepelIlya)

- New Docker tutorial

- Disabled texture streaming to avoid issue of textures not loading in scene captures

- Adjusted scene capture camera gamma to 2.4

- Fixed leaking objects in simulation when unspawning a vehicle. Now Pawn’s controller is destroyed too if necessary when destroying an Actor

- Fixed overflow on platform time-stamp. Now it uses

double - Upgraded @rpclib to fix crash when client exits too fast (rpclib/PR#167)

- Moved “PythonClient” inside deprecated folder to avoid confusion

- Refactored sensor related code

- New plugin system for sensors that simplifies adding sensors, mini-tutorial at #830

- Compile-time dispatcher for sensors and serializers

- Improvements to the streaming library

- Added multi-streams for streaming simultaneously to multiple clients (used by the “tick” message)

- Messages re-use allocated memory when possible

- Allows unsubscribing from a stream

- Fixed client receives interleaved sensor messages, some messages can be discarded if connection is too slow, though

- Fixed streaming client fails to connect in Windows

- Fixed streaming client keeps trying to reconnect after destroying a sensor

- Refactored client C++ API

- Python GIL is released whenever possible to avoid blocking

- Fixed deadlock when closing the simulator while a client is connected

- Fixed crash on simulator shutdown if a client has connected at some point

- Set methods are now sent async which greatly improves performance in the client-side

- Vehicle control is cached and not sent if it has not changed

- Suppressed exceptions in destructors

- Other development improvements

- Improved Linux Makefile, fine-grained targets to reduce compilation times in development

- Workaround for “setup.py” to link against “libcarla_client.a” again (Linux only)

- Added support for “.gtest” file, each line of this file is passed to GTest executables as arguments when running

make checktargets - Python eggs are also archived on Jenkins to easily get them without downloading the full package

- Added uncrustify config file for formatting UE4 C++ code