Closing the summer on a high note, the long-awaited CARLA 0.9.10 is here! Get comfortable. This is going to be an exciting trip.

CARLA 0.9.10 comes with its trunk packed with improvements, with a notable focus on sensors. The LIDAR sensor has been upgraded, and a brand-new semantic LIDAR sensor is here to provide much more information about the surroundings. We are working to improve the rendering pipeline for cameras and, in the meantime, some changes in the sky atmosphere and RGB cameras have been made for those renders to look even better.

This idea of renovation extends to other CARLA modules too. The architecture of Traffic Manager has been thoroughly revisited to improve performance and ensure consistency. It now deserves the name TM 2.0. The integration with Autoware has been improved and simplified, providing an agent that can be used out-of-the-box. These improvements come along with the first integration of the CARLA-ROS bridge with ROS2, to keep up with the new generation of ROS. Finally, the pedestrian gallery is undergoing a renovation too. We want to provide a more varied and realistic set of walkers that make the simulation feel alive.

There are also some additions worth mentioning. The integration with OpenStreetMap (still experimental) allows users to generate CARLA maps based on the road definitions in OpenStreetMap, an open-license world map. A plug-in repository has been created for contributors to share their work, and it already comes with a remarkable contribution: the carlaviz plug-in, which allows for web browser visualization of the simulation. It has been developed and maintained by mjxu96. We would like to take some extra time to thank all the external contributors that were part of this release. We are grateful for their work, and all their names will appear both in this post and in the release video.

Last, but not least, we would also like to announce that… the CARLA AD Leaderboard is finally open to the public! Test the driving proficiency of your AD agent, and share the results with the rest of the world. The announcement video can be found at the end of this post. Find out more on the CARLA AD Leaderboard website!

Here is a recap of all the novelties in CARLA 0.9.10!

- LIDAR sensor upgrade — Better performance, more detailed representation of the world, and additional parameterization for a more realistic behavior of the sensor.

- Semantic LIDAR sensor — A new LIDAR sensor providing all the available data per point, and allowing semantic and instance segmentation of its surroundings.

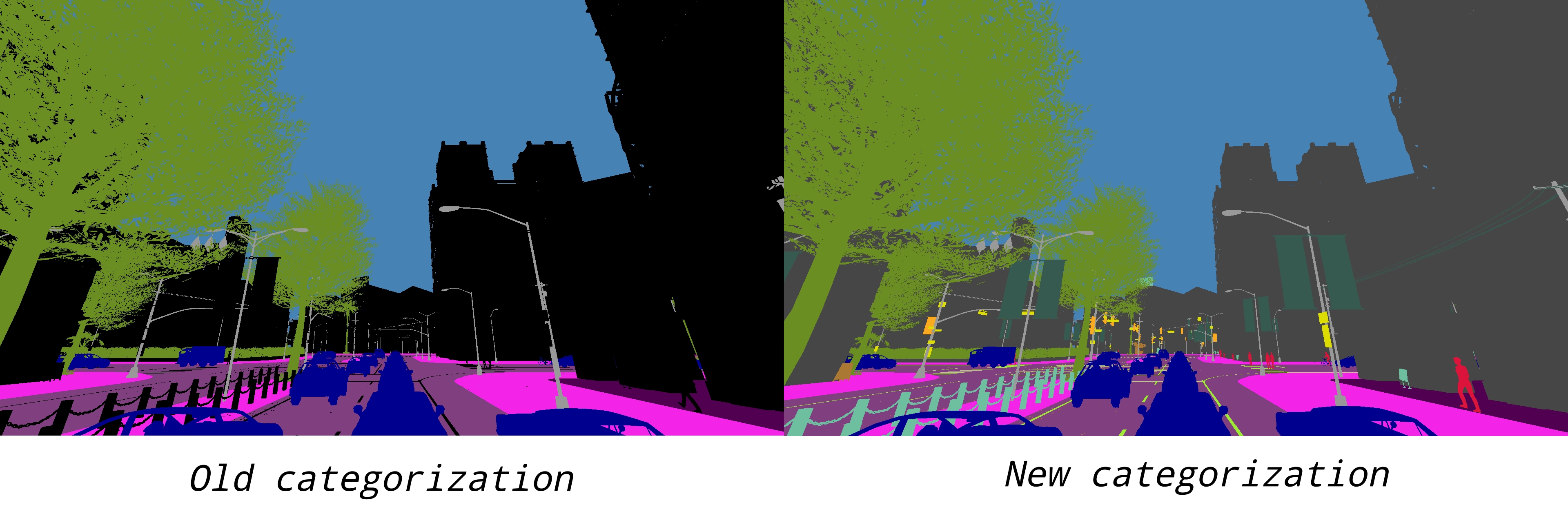

- Extended semantic segmentation — More precise categorization for a better recognition of the surroundings.

- OpenStreetMap integration (experimental) — A new feature to generate CARLA maps based on an open-license world map.

- Global bounding box accessibility — The carla.World class has access to the bounding boxes of all the elements in the scene.

- Enhanced vehicle physics — Changes in the core physics result in stable turns, sensible responses to collisions, and more realistic suspension systems.

- Plug-in repository — A space for CARLA plug-ins and add-ons that are developed and maintained by external contributors.

- carlaviz plug-in — New plug-in that allows for visualization of the simulation in a web browser. A contribution made by mjxu96.

- Traffic Manager 2.0 — A remodelled architecture that improves performance, and fixes possible frame delays when commands are applied.

- ROS2 integration — The CARLA-ROS bridge now provides support for ROS2, the first step to achieve full integration with the next generation of ROS.

- Autoware integration improvements — Now provides an Autoware image with all the content and configuration needed, and an Autoware agent ready to be used.

- New RSS features — Integration of the new features in ad-rss-lib 4.0.x, which include pedestrians, and unstructured scenarios.

- Units of measurement in Python API docs — The Python API now includes the units of measurement for variables, parameters and method returns.

- Pedestrian gallery extension — The first iteration on a great upgrade of the pedestrians available in CARLA. So far, it features new models with much more detailed facial features and clothing.

- New sky atmosphere — Adjustments in the sunlight values for a more realistic ambience in the simulation.

- Eye adaptation for RGB cameras — RGB cameras by default will automatically adjust the exposure values according to the scene luminance.

- Contributors — A space to thank the external contributors since the release of CARLA 0.9.9.

- Changelog — A summary of the additions and fixes featured in this release.

- CARLA AD Leaderboard Announcement — Watch the video introducing the recently opened CARLA AD Leaderboard.

Let’s take a look!

LIDAR sensor upgrade

First and foremost, the performance of the LIDAR sensor has been greatly improved. This has been achieved while also providing new colliders for the elements in the scene. The sensor's representation of the world is now more detailed, and the elements in it are described much more accurately.

Some new attributes have been added to the LIDAR parameterization. These make the sensor and the point cloud behave in a more realistic manner.

- Intensity — The raw_data retrieved by the LIDAR sensor is now a 4D array of points. The fourth dimension contains an approximation of the intensity of the received ray. The intensity is attenuated as the ray travels, according to the formula:

  $$\frac{\text{intensity}}{\text{original\_intensity}} = e^{-\text{attenuation\_coef} \cdot \text{distance}}$$

  The coefficient of attenuation may depend on the sensor’s wavelength and the conditions of the atmosphere. It can be modified with the LIDAR attribute atmosphere_attenuation_rate.

- Drop-off — In real sensors, some points of the cloud can be lost for multiple reasons, such as perturbations in the atmosphere or sensor errors. We simulate these with two different models.

  - General drop-off — Proportion of points that are dropped randomly. This is done before the tracing, meaning the points being dropped are not calculated, and therefore performance is increased. If dropoff_general_rate = 0.5, half of the points will be dropped.

  - Intensity-based drop-off — For each point detected, an extra drop-off is calculated with a probability based on the computed intensity. This probability is determined by two parameters. dropoff_zero_intensity is the probability of points with zero intensity being dropped. dropoff_intensity_limit is a threshold intensity above which no points will be dropped. For intensities between zero and that threshold, the drop probability decreases linearly from dropoff_zero_intensity down to zero.

- Noise — The noise_stddev attribute enables a noise model that simulates the unexpected deviations that appear in real-life sensors. If noise_stddev is greater than zero, the location of each point will be randomly perturbed along the vector of the ray that detected it.
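To make the three models above concrete, here is a minimal pure-Python sketch of how they could combine for a list of ideal ray hits. It is not CARLA's implementation, which runs in C++ on the server. The default values in LidarParams are assumed to mirror the 0.9.10 blueprint defaults, and all function names are hypothetical. The intensity-based drop probability follows the linear rule described above.

```python
import math
import random
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float, float]


@dataclass
class LidarParams:
    # Assumed to mirror the CARLA 0.9.10 blueprint defaults; check your version.
    atmosphere_attenuation_rate: float = 0.004
    dropoff_general_rate: float = 0.45
    dropoff_intensity_limit: float = 0.8
    dropoff_zero_intensity: float = 0.4
    noise_stddev: float = 0.0


def intensity(distance: float, p: LidarParams) -> float:
    """Fraction of the original intensity left after the ray travels `distance` metres."""
    return math.exp(-p.atmosphere_attenuation_rate * distance)


def intensity_drop_probability(i: float, p: LidarParams) -> float:
    """Linear drop probability: dropoff_zero_intensity at i = 0, zero at or above the limit."""
    if i >= p.dropoff_intensity_limit:
        return 0.0
    return p.dropoff_zero_intensity * (1.0 - i / p.dropoff_intensity_limit)


def simulate_returns(hits: List[Point], p: LidarParams,
                     rng: random.Random) -> List[Tuple[float, float, float, float]]:
    """Turn ideal ray hits (sensor frame, metres) into (x, y, z, intensity) points."""
    cloud = []
    for x, y, z in hits:
        # General drop-off: decided before tracing, so dropped rays cost nothing
        if rng.random() < p.dropoff_general_rate:
            continue
        distance = math.sqrt(x * x + y * y + z * z)
        i = intensity(distance, p)
        # Intensity-based drop-off: weak returns are more likely to be lost
        if rng.random() < intensity_drop_probability(i, p):
            continue
        # Noise: move the point along its own ray by a Gaussian amount
        if p.noise_stddev > 0.0 and distance > 0.0:
            scale = (distance + rng.gauss(0.0, p.noise_stddev)) / distance
            x, y, z = x * scale, y * scale, z * scale
        cloud.append((x, y, z, i))
    return cloud
```

With the assumed defaults, a hit 100 m away keeps about e^(-0.4), roughly 67% of its intensity. That is below the 0.8 threshold, so the hit is also exposed to the intensity-based drop-off.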

Semantic LIDAR sensor

This sensor simulates a rotating LIDAR implemented using ray-casting that exposes all the information about the raycast hit. Its behaviour is quite similar to the LIDAR sensor, but there are two main differences between them.

- The raw data retrieved by the semantic LIDAR includes more data per point.

  - Coordinates of the point (as the normal LIDAR does).

  - The cosine of the angle of incidence, that is, the angle between the ray and the normal of the surface hit.

  - Instance and semantic ground truth: the index of the CARLA object hit, and its semantic tag.

- The semantic LIDAR does not include intensity, drop-off, or noise model attributes.

The capabilities of this sensor are remarkable. A simple visualization of the semantic LIDAR data provides a much clearer view of the surroundings.
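The per-point data described above can be unpacked without any CARLA dependency. The sketch below assumes each point is stored as four little-endian 32-bit floats (x, y, z, and the cosine of the incidence angle), followed by two 32-bit unsigned integers (object index and semantic tag). That layout and all the names are our assumptions. Check them against your CARLA version, for instance against how the open3D_lidar.py example reads the buffer.

```python
import struct
from collections import Counter
from typing import Dict, List, NamedTuple

# Assumed per-point layout: x, y, z, cos_inc_angle as float32, then object_idx, object_tag as uint32
POINT_FORMAT = "<4f2I"
POINT_SIZE = struct.calcsize(POINT_FORMAT)  # 24 bytes per point


class SemanticLidarPoint(NamedTuple):
    x: float
    y: float
    z: float
    cos_inc_angle: float
    object_idx: int
    object_tag: int


def decode_semantic_lidar(raw: bytes) -> List[SemanticLidarPoint]:
    """Split a raw semantic LIDAR buffer into typed points."""
    if len(raw) % POINT_SIZE:
        raise ValueError(f"buffer length {len(raw)} is not a multiple of {POINT_SIZE}")
    return [SemanticLidarPoint(*fields) for fields in struct.iter_unpack(POINT_FORMAT, raw)]


def points_per_tag(points: List[SemanticLidarPoint]) -> Dict[int, int]:
    """Count how many points hit each semantic tag."""
    return dict(Counter(p.object_tag for p in points))
```

In a sensor callback, this would be called with something like decode_semantic_lidar(bytes(measurement.raw_data)). For large clouds, a NumPy structured dtype over the same buffer is the faster choice.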

Extended semantic segmentation

The semantic ground truth has been extended to recognize a wider range of categories. Now, the data retrieved by semantic segmentation sensors will be more precise, as many elements in the scene that were previously undetected can be easily distinguished.

Here is a list of the categories currently available. Previously existing ones are marked in grey.

- Bridge — Only the structure of the bridge. Fences, people, vehicles, and other elements on top of it are labeled separately.

- Building — Buildings such as houses and skyscrapers, and the elements attached to them, e.g. air conditioners, scaffolding, awnings, or ladders.

- Dynamic — Other elements whose position is susceptible to change over time, e.g. movable trash bins, buggies, bags, wheelchairs, animals, etc.

- Fence — Barriers, railing, or other upright structures. Basically wood or wire assemblies that enclose an area of ground.

- Ground — Any horizontal ground-level structure that does not match any other category. For example, areas shared by vehicles and pedestrians, or flat roundabouts delimited from the road by a curb.

- GuardRail — All types of guard rails/crash barriers.

- Other — Everything that does not belong to any other category.

- Pedestrian — Humans that walk or ride/drive any kind of vehicle or mobility system. E.g. bicycles or scooters, skateboards, horses, roller-blades, wheel-chairs, etc.

- Pole — Small, mainly vertically oriented poles. If the pole has a horizontal part (often for traffic light poles), this is also considered part of the pole, e.g. sign poles, traffic light poles.

- RailTrack — All kinds of rail tracks that are non-drivable by cars, e.g. subway and train rail tracks.

- Road — Part of the ground on which cars usually drive, e.g. lanes in any direction, and streets.

- RoadLine — The markings on the road.

- Sidewalk — Part of ground designated for pedestrians or cyclists. Delimited from the road by some obstacle (such as curbs or poles), not only by markings. This label includes a possibly delimiting curb, traffic islands (the walkable part), and pedestrian zones.

- Sky — Open sky. Includes clouds and the sun.

- Static — Elements in the scene and props that are immovable. E.g. fire hydrants, fixed benches, fountains, bus stops, etc.

- Terrain — Grass, ground-level vegetation, soil or sand. These areas are not meant to be driven on. This label includes a possibly delimiting curb.

- TrafficLight — Traffic light boxes without their poles.

- TrafficSign — Signs installed by the state/city authority, usually for traffic regulation. This category does not include the poles the signs are attached to, e.g. traffic signs, parking signs, direction signs…

- Unlabeled — Elements that have not been categorized are considered Unlabeled. This category is meant to be empty, or at least to contain only elements with no collisions.

- Vegetation — Trees, hedges, all kinds of vertical vegetation. Ground-level vegetation is considered Terrain.

- Vehicles — Cars, vans, trucks, motorcycles, bikes, buses, trains…

- Wall — Individual standing walls. Not part of a building.

- Water — Horizontal water surfaces. E.g. Lakes, sea, rivers.

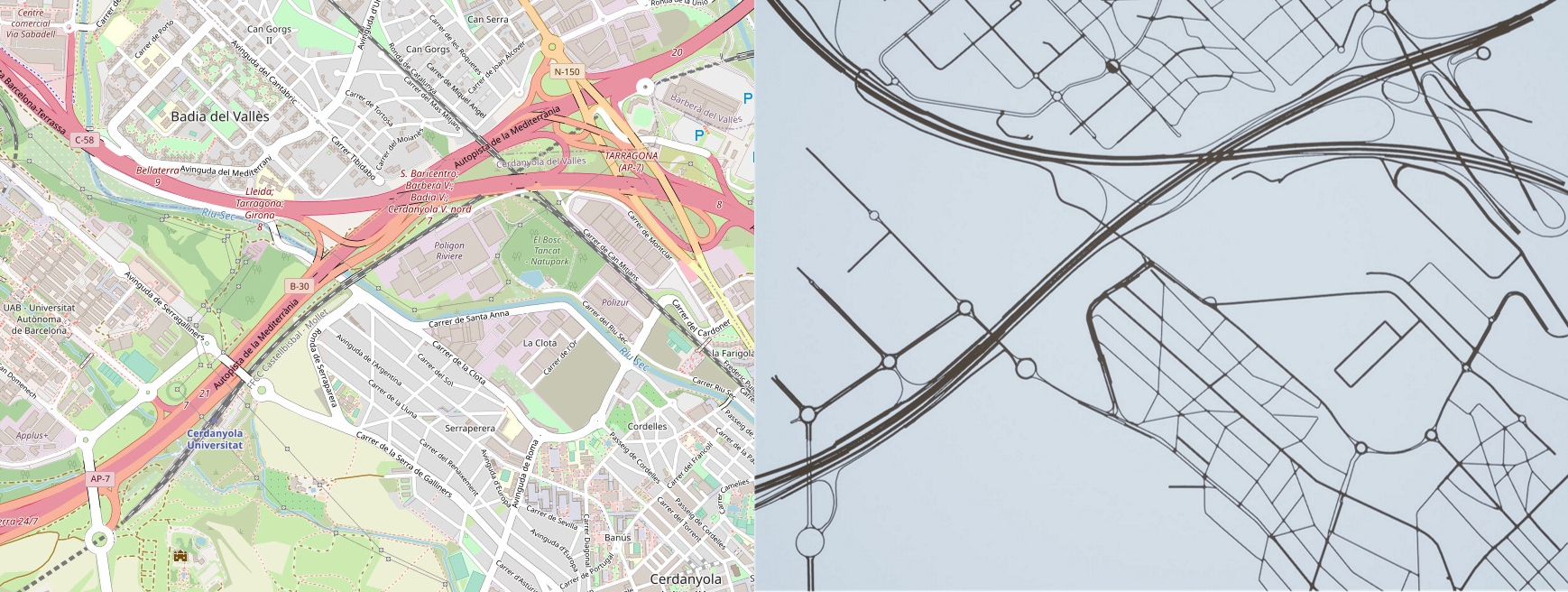

OpenStreetMap integration

OpenStreetMap is an open-license map of the world developed by contributors. Sections of this map can be exported to an XML file in .osm format. CARLA can convert this file to OpenDRIVE format, and ingest it like any other OpenDRIVE map using the OpenDRIVE Standalone Mode. The process is detailed in the documentation, but here is a summary.

1. Obtain a map with OpenStreetMap — Go to OpenStreetMap, and export the XML file containing the map information.

2. Convert to OpenDRIVE format — To do the conversion from .osm to .xodr format, two classes have been added to the Python API.

   - carla.Osm2Odr — The class that does the conversion. Its convert() method takes the content of the .osm file parsed as a string, and returns a string containing the resulting .xodr.

     - osm_file — The content of the initial .osm file parsed as a string.

     - settings — A carla.Osm2OdrSettings object containing the settings for the conversion.

   - carla.Osm2OdrSettings — Helper class that contains different parameters used during the conversion.

     - use_offsets (default False) — Determines whether the map should be generated with an offset, thus moving the origin from the center according to that offset.

     - offset_x (default 0.0) — Offset in the X axis.

     - offset_y (default 0.0) — Offset in the Y axis.

     - default_lane_width (default 4.0) — Determines the width that lanes should have in the resulting XODR file.

     - elevation_layer_height (default 0.0) — Determines the height separating elements in different layers, used for overlapping elements. Read more on layers for a better understanding of this.

3. Import into CARLA – The OpenDRIVE file can be automatically ingested in CARLA using the OpenDRIVE Standalone Mode. Use either a customized script or the config.py example provided in CARLA.

Here is an example of the feature at work. The image on the left belongs to the OpenStreetMap page. The image on the right is a fragment of that map area ingested in CARLA.

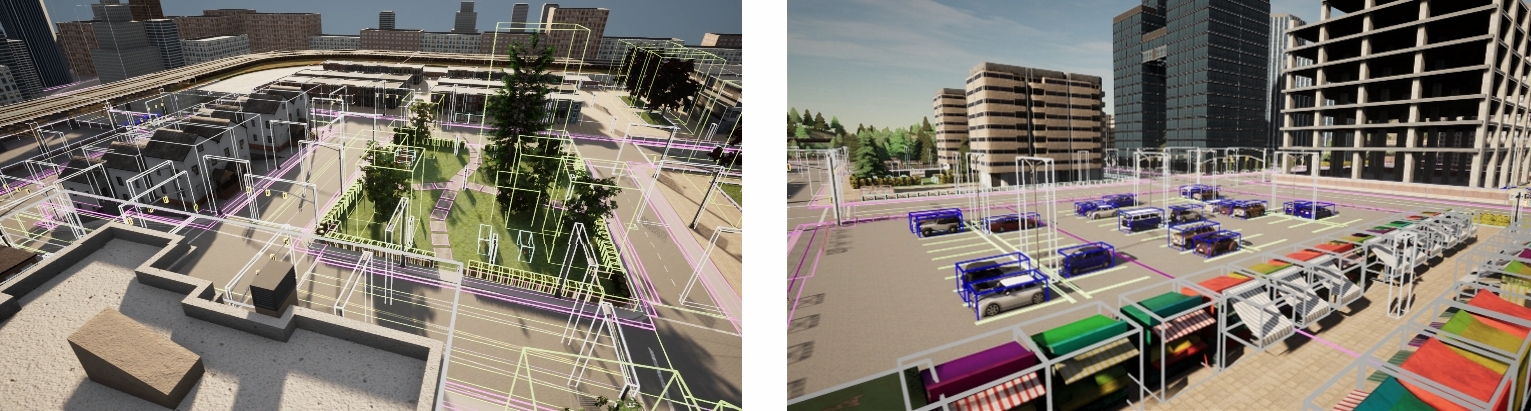
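Steps 1 and 2 can be put together in a short script. This is a minimal sketch that assumes the CARLA 0.9.10 Python API is installed, and that convert() is called on the class itself, as the carla.Osm2Odr.convert() entry in the changelog suggests. The function name osm_to_xodr and the file paths are hypothetical. The carla module is imported inside the function so that the file can be loaded without CARLA present.

```python
def osm_to_xodr(osm_path: str, xodr_path: str, lane_width: float = 4.0) -> str:
    """Convert an OpenStreetMap export to OpenDRIVE with carla.Osm2Odr and save the result."""
    import carla  # deferred import: only needed when the conversion actually runs

    # Step 1 output: the .osm file exported from OpenStreetMap, read as a string
    with open(osm_path, encoding="utf-8") as f:
        osm_data = f.read()

    # Conversion settings; every attribute not set here keeps its default value
    settings = carla.Osm2OdrSettings()
    settings.default_lane_width = lane_width

    # Step 2: .osm string in, .xodr string out
    xodr_data = carla.Osm2Odr.convert(osm_data, settings)

    with open(xodr_path, "w", encoding="utf-8") as f:
        f.write(xodr_data)
    return xodr_data
```

The saved .xodr file is what step 3 then ingests through the OpenDRIVE Standalone Mode.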

Global bounding box accessibility

Up until now in the Python API, only carla.Vehicle, carla.Walker, and carla.Junction had access to a bounding box containing their corresponding geometry. Now, the carla.World has access to the bounding boxes of all the elements in the scene. These are returned in an array of carla.BoundingBox. The query can be filtered using the enum class carla.CityObjectLabel. Note that the enum values in carla.CityObjectLabel are the same as the semantic segmentation categories.

```python
import carla

# Connect to a CARLA server running on the default host and port
client = carla.Client('localhost', 2000)
world = client.get_world()

# Return the bounding boxes of all the elements in the scene
all_bbs = world.get_level_bbs()

# Return only the bounding boxes of the buildings in the scene
filtered_bbs = world.get_level_bbs(carla.CityObjectLabel.Buildings)
```

Moreover, a carla.BoundingBox object now includes not only the extent and location of the box, but also its rotation.
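Because boxes now carry a rotation, their corners can no longer be read directly from the extent and location. As a hedged illustration, the sketch below computes the four ground-plane corners of a box from its center, its extent (half the box size along each axis), and its yaw in degrees, using plain floats instead of carla types. The function name is hypothetical. We assume that a positive yaw rotates the X axis towards the Y axis, and we ignore pitch and roll.

```python
import math
from typing import List, Tuple


def footprint_corners(cx: float, cy: float, ex: float, ey: float,
                      yaw_deg: float) -> List[Tuple[float, float]]:
    """Return the 4 XY corners of a box with half-size (ex, ey), rotated by yaw around its center."""
    yaw = math.radians(yaw_deg)
    c, s = math.cos(yaw), math.sin(yaw)
    corners = []
    for lx, ly in ((ex, ey), (ex, -ey), (-ex, -ey), (-ex, ey)):
        # Rotate the local corner offset, then translate it to the box center
        corners.append((cx + lx * c - ly * s, cy + lx * s + ly * c))
    return corners
```

For a carla.BoundingBox bb, we would expect the call to look like footprint_corners(bb.location.x, bb.location.y, bb.extent.x, bb.extent.y, bb.rotation.yaw).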

Enhanced vehicle physics

We have worked on the vehicle physics so that their volumes are more accurate, and the core physics (such as center of mass, suspension, wheel’s friction…) are more realistic. The result is more noticeable whenever a vehicle turns or collides with another object. The balance of the vehicle is much greater now. The response to commands and collisions is no longer over-the-top, but restrained in favor of realism.

Moreover, there are some additions in carla.Actor that widen the range of physics directly applicable to vehicles.

- carla.Actor.add_force(self, force) — Applies a force at the center of mass of the actor. This method should be used for forces that are applied over a certain period of time.

- carla.Actor.add_torque(self, torque) — Applies a torque at the center of mass of the actor. This method should be used for torques that are applied over a certain period of time.

- carla.Actor.enable_constant_velocity(self, velocity) — Sets a vehicle’s velocity vector (local space) to a constant value over time. The resulting velocity will be approximately the velocity being set, as with carla.Actor.set_target_velocity(self, velocity).

- carla.Actor.disable_constant_velocity(self) — Disables any constant velocity previously set for a carla.Vehicle actor.

Plug-in repository

A new repository has been created purposely for external contributions. This is meant to be a space for contributors to develop and maintain their plug-ins and add-ons for CARLA.

Take the chance, and share your work in the plug-in repository! CARLA is grateful to all the contributors who dedicate their time to help the project grow. This is the perfect space to make your hard work visible for everybody.

carlaviz plug-in

This plug-in was created by the contributor mjxu96, and it is already featured in the CARLA plug-ins repository. It creates a web browser window with a basic representation of the scene. Actors are updated on-the-fly, sensor data can be retrieved, and additional text, lines and polylines can be drawn in the scene.

There is detailed carlaviz documentation already available, but here is a brief summary of how to run the plug-in, and the output it provides.

1. Download carlaviz.

```sh
# Pull only the image that matches the CARLA package being used
docker pull mjxu96/carlaviz:0.9.6
docker pull mjxu96/carlaviz:0.9.7
docker pull mjxu96/carlaviz:0.9.8
docker pull mjxu96/carlaviz:0.9.9

# Pull this image if working on a CARLA build from source
docker pull carlasim/carlaviz:latest
```

2. Run CARLA.

3. Run carlaviz. In another terminal, run the following command. Replace <name_of_Docker_image> with the name of the image previously downloaded, e.g. carlasim/carlaviz:latest or mjxu96/carlaviz:0.9.9.

```sh
docker run -it --network="host" -e CARLAVIZ_HOST_IP=localhost -e CARLA_SERVER_IP=localhost -e CARLA_SERVER_PORT=2000 <name_of_Docker_image>
```

4. Open carlaviz in a web browser. By default, carlaviz runs on port 8080 of the localhost, so go to http://127.0.0.1:8080/.
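If you launch carlaviz from a script, the image choice from step 1 and the command from step 3 can be kept in one place. The following is a hypothetical helper, not part of carlaviz. It only knows the image tags listed in step 1, and it builds an argument list equivalent to the docker run command above, which could then be passed to subprocess.run.

```python
import shlex
from typing import List, Optional

# Image tags as listed in step 1
PACKAGED_IMAGES = {v: f"mjxu96/carlaviz:{v}" for v in ("0.9.6", "0.9.7", "0.9.8", "0.9.9")}
SOURCE_BUILD_IMAGE = "carlasim/carlaviz:latest"


def carlaviz_image(carla_version: Optional[str] = None) -> str:
    """Image for a packaged CARLA release, or the source-build image when no version is given."""
    if carla_version is None:
        return SOURCE_BUILD_IMAGE
    if carla_version not in PACKAGED_IMAGES:
        raise ValueError(f"no packaged carlaviz image listed for CARLA {carla_version}")
    return PACKAGED_IMAGES[carla_version]


def carlaviz_run_args(image: str, carla_host: str = "localhost", carla_port: int = 2000,
                      viz_host: str = "localhost") -> List[str]:
    """Argument list equivalent to the docker run command in step 3."""
    return [
        "docker", "run", "-it", "--network=host",
        "-e", f"CARLAVIZ_HOST_IP={viz_host}",
        "-e", f"CARLA_SERVER_IP={carla_host}",
        "-e", f"CARLA_SERVER_PORT={carla_port}",
        image,
    ]


def carlaviz_command_line(image: str, **kwargs) -> str:
    """Shell-quoted version of carlaviz_run_args, handy for printing or copy-pasting."""
    return " ".join(shlex.quote(arg) for arg in carlaviz_run_args(image, **kwargs))
```

Passing the arguments as a list avoids shell quoting issues. The shell-quoted string is only meant for display.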

The plug-in shows a visualization window on the right. The scene is updated in real-time. A sidebar on the left side contains a list of items to be shown. Some of these items will appear in the visualization window, others (mainly sensor and game data) appear just above the item list. The result will look similar to the following.

Traffic Manager 2.0

For this iteration, the inner structure and logic of the Traffic Manager module has been revamped. These changes are explained in detail in the Traffic Manager documentation. Here is a brief summary of the principles that set the ground for the new architecture.

- Data query centralization. The most impactful component in the new Traffic Manager 2.0 logic is the ALSM (Agent Lifecycle and State Management). It takes care of all the server calls necessary to get the current state of the simulation, and stores everything that will be needed later on: lists of vehicles and walkers, their positions and velocities, static attributes such as bounding boxes, etc. Everything is queried by the ALSM and cached, so that computational cost is reduced and redundant API calls are avoided. Additionally, this cached data will be used by different components, such as paths. Vehicle tracking is externalized in other components, so that there is no information dependency.

- Per-vehicle loop structure. Previously in Traffic Manager, the calculations were divided into global stages. They were self-contained, which made it difficult to save computational cost. Later in the pipeline, stages had no knowledge of the vehicle calculations done previously. Changing these global stages to a per-vehicle structure makes it easier to implement features such as parallelization, as the processing of stages can be triggered externally.

- Loop control. This per-vehicle structure brings another issue: synchronization between vehicles has to be guaranteed. For this reason, a component is created to control the loop of the vehicle calculations. This controller creates synchronization barriers that force each vehicle to wait for the rest to finish their calculations. Once all the vehicles are done, the following stage is triggered. This ensures that all the vehicle calculations are done in sync, and avoids frame delays between the processing cycle and the commands being applied.
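These three principles can be illustrated with a generic Python sketch. The world state is queried once per frame and shared, in the spirit of the ALSM cache. Each vehicle then runs its stages in its own thread, and a threading.Barrier prevents any vehicle from starting stage k+1 until every vehicle has finished stage k. This is not Traffic Manager's C++ code. run_frame and the stage functions are hypothetical names chosen for illustration.

```python
import threading
from typing import Any, Callable, Dict, List, Sequence

# A stage receives (vehicle_id, cached_snapshot, results_so_far) and returns its output
Stage = Callable[[int, Dict[int, Any], Dict[int, List[Any]]], Any]


def run_frame(vehicle_ids: Sequence[int],
              query_state: Callable[[], Dict[int, Any]],
              stages: Sequence[Stage]) -> Dict[int, List[Any]]:
    """Run every stage for every vehicle, keeping all vehicles in lockstep."""
    if not vehicle_ids:
        return {}
    # One query per frame; every stage reads this cached snapshot instead of calling the server
    snapshot = query_state()
    results: Dict[int, List[Any]] = {vid: [] for vid in vehicle_ids}
    barrier = threading.Barrier(len(vehicle_ids))
    errors: List[BaseException] = []

    def worker(vid: int) -> None:
        try:
            for stage in stages:
                results[vid].append(stage(vid, snapshot, results))
                # Synchronization barrier: wait until every vehicle has finished this stage
                barrier.wait()
        except threading.BrokenBarrierError:
            pass  # another vehicle failed; stop without waiting forever
        except Exception as exc:
            errors.append(exc)
            barrier.abort()  # release the vehicles blocked on the barrier

    threads = [threading.Thread(target=worker, args=(vid,)) for vid in vehicle_ids]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    if errors:
        raise errors[0]
    return results
```

Thanks to the barrier, a later stage can safely read every vehicle's output from the previous stage, for example to check for potential collisions with other vehicles.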

ROS2 integration

The CARLA-ROS bridge now provides support for the new generation of ROS. This integration is still a work in progress, and the current state can be followed in the corresponding ros2 branch.

Autoware integration improvements

The CARLA-Autoware bridge is now part of the official Autoware repository. The Autoware bridge relies on the CARLA ROS Bridge and its main objective is to establish the communication between the CARLA world and Autoware (mainly through ROS datatypes conversions).

The CARLA-Autoware repository contains an example of usage, with an Autoware agent ready to be used out-of-the-box. The agent is provided as a Docker image with all the necessary components already included. It features:

- Autoware 1.14.0.

- The Autoware content repository. This repository contains additional data required to run Autoware with CARLA, such as point cloud maps, vector maps, and some configuration files.

- CARLA ROS bridge.

The agent’s configuration, including sensor configuration, can be found here.

Executing the agent

1. Clone the carla-autoware repository.

```sh
git clone --recurse-submodules https://github.com/carla-simulator/carla-autoware
```

2. Build the docker image.

```sh
cd carla-autoware
./build.sh
```

3. Run a CARLA server. You can either run the CARLA server in your host machine or within a Docker container. Find out more here.

4. Run the carla-autoware image. This will start an interactive shell inside the container.

```sh
./run.sh
```

5. Run the agent.

```sh
roslaunch carla_autoware_agent carla_autoware_agent.launch town:=Town01
```

6. Select the desired destination. Use the 2D Nav Goal button in RVIZ. The output will be similar to the following.

New RSS features

The RSS sensor in CARLA now has full support for ad-rss-lib 4.0.x, which includes two main features.

- Unstructured roads — Scenarios where vehicles move along a route with no specific lanes defined, or are forced to leave their lanes to avoid obstacles.

- Pedestrians — Moving in both structured and unstructured scenarios.

Find out more about these features either in the original RSS paper, or in the ad-rss-lib documentation on unstructured scenes and the behavior model for pedestrians.

Units of measurement in Python API docs

For the sake of clarity, the Python API docs now include the units of measurement used by parameters, variables and method returns. These appear in parentheses, next to the type of the variable.

Pedestrian gallery extension

This release includes the first iteration of a major extension of the pedestrian gallery. In order to recreate reality more accurately, one of our main goals is to provide a more diverse set of walkers. Moreover, great emphasis is being put on the details: meticulous models, attention to facial features, new shaders and materials for their skin, hair, eyes… In summary, we want to make them, and therefore the simulation, feel alive.

So far, there are three new models in the blueprint library, a sneak peek of what is to come. Take a look at the new additions!

New sky atmosphere

The light values of the scene (sun, streetlights, buildings, cars…) have been adjusted to values closer to reality. Due to these changes, the default values of the RGB camera sensor have been balanced accordingly, so now its parameterization is also more realistic.

Eye adaptation for RGB cameras

The default mode of the RGB cameras has changed to auto exposure histogram. In this mode, the exposure of the camera will be automatically adjusted depending on the lighting conditions. When changing from a dimly lit environment to a brightly lit one (or the other way around), the camera will adapt in a similar way the human eye does.

The eye adaptation can be disabled by changing the value of the attribute exposure_mode from its default, histogram, to manual. This allows you to set a fixed exposure value that will not be affected by the luminance of the scene.

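```python
camera_bp = world.get_blueprint_library().find('sensor.camera.rgb')
camera_bp.set_attribute('exposure_mode', 'manual')  # set on the blueprint, before spawning the camera
```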

Contributors

We would like to dedicate this space to all of those whose contributions were merged in any of the project’s GitHub repositories during the development of CARLA 0.9.10. Thanks a lot for your hard work!

- arkadiy-telegin

- Bitfroest

- dennisrmaier

- Diego-ort

- eleurent

- elvircrn

- fgolemo

- Hakhyun-Kim

- hofbi

- ICGog

- ItsTimmy

- jbmag

- johschmitz

- kbu9299

- ll7

- patmalcolm91

- pedroburito

- PhDittmann

- s-hillerk

- simmranvermaa

- squizz617

- stonier

- umateusz

- Vaan5

- mjxu96

- yankagan

Changelog

- Added retrieval of bounding boxes for all the elements of the level

- Added deterministic mode for Traffic Manager

- Added support in Traffic Manager for dead-end roads

- Upgraded CARLA Docker image to Ubuntu 18.04

- Upgraded to AD RSS v4.1.0 supporting unstructured scenes and pedestrians, and fixed spdlog to v1.7.0

- Changed frozen behavior for traffic lights. It now affects all traffic lights at the same time

- Added new pedestrian models

- API changes:

  - Renamed actor.set_velocity() to actor.set_target_velocity()

  - Renamed actor.set_angular_velocity() to actor.set_target_angular_velocity()

  - RGB cameras exposure_mode is now set to histogram by default

- API extensions:

  - Added carla.Osm2Odr.convert() function and carla.Osm2OdrSettings class to support Open Street Maps to OpenDRIVE conversion

  - Added world.freeze_all_traffic_lights() and traffic_light.reset_group()

  - Added client.stop_replayer() to stop the replayer

  - Added world.get_vehicles_light_states() to get all the car light states at once

  - Added constant velocity mode (actor.enable_constant_velocity() / actor.disable_constant_velocity())

  - Added function actor.add_angular_impulse() to add angular impulse to any actor

  - Added actor.add_force() and actor.add_torque()

  - Added functions transform.get_right_vector() and transform.get_up_vector()

  - Added command to set multiple car light states at once

  - Added 4-matrix form of transformations

- Added new semantic segmentation tags: RailTrack, GuardRail, TrafficLight, Static, Dynamic, Water and Terrain

- Added fixed ids for street and building lights

- Added vehicle light and street light data to the recorder

- Improved the colliders and physics for all vehicles

- All sensors are now multi-stream, so the same sensor can be listened to from different clients

- New semantic LiDAR sensor (lidar.ray_cast_semantic)

- Added open3D_lidar.py, a more friendly LiDAR visualizer

- Added make command to download contributions as plugins (make plugins)

- Added a warning when using SpringArm exactly in the ‘z’ axis of the attached actor

- Improved performance of raycast-based sensors through parallelization

- Added an approximation of the intensity of each point of the cloud in the LiDAR sensor

- Added Dynamic Vision Sensor (DVS) camera based on ESIM simulation http://rpg.ifi.uzh.ch/esim.html

- Improved LiDAR and radar to better match the shape of the vehicles

- Added support for additional TraCI clients in Sumo co-simulation

- Added script example to synchronize the gathering of sensor data in client

- Added default values and a warning message for lanes missing the width parameter in OpenDRIVE

- Added parameter to enable/disable pedestrian navigation in standalone mode

- Improved mesh partition in standalone mode

- Added Renderdoc plugin to the Unreal project

- Added configurable noise to LiDAR sensor

- Replace deprecated platform.dist() with recommended distro.linux_distribution()

- Improved the performance of capture sensors

Art

- Add new Sky atmosphere

- New colliders for all vehicles and pedestrians

- New physics for all vehicles and pedestrians

- Improve the center of mass for each vehicle

- New tags and fix Semantic Segmentation

- Add real values for illumination

- Add new pedestrians

- Set exposure mode as autoexposure Histogram as default for all rgb cameras

Fixes

- Fixed the center of mass for vehicles

- Fixed a number of OpenDRIVE parsing bugs

- Fixed vehicles’ bounding boxes, now they are automatic

- Fixed a map change error when Traffic Manager is in synchronous mode

- Fixed add entry issue for applying parameters more than once in Traffic Manager

- Fixed std::numeric_limits::epsilon error in Traffic Manager

- Fixed memory leak on manual_control.py scripts (sensor listening was not stopped before destroying)

- Fixed a bug in spawn_npc_sumo.py script computing not allowed routes for a given vehicle class

- Fixed a bug where get_traffic_light() would always return None

- Fixed recorder determinism problems

- Fixed several untagged and mistagged objects

- Fixed rain drop spawn issues when spawning camera sensors

- Fixed semantic tags in the asset import pipeline

- Fixed Update.sh failing when the root folder contains a space

- Fixed dynamic meshes not moving to the initial position when replaying

- Fixed colors of lane markings when importing a map, they were reversed (white and yellow)

- Fixed missing include directive in file WheelPhysicsControl.h

- Fixed gravity measurement bug from IMU sensor

- Fixed LiDAR’s point cloud reference frame

- Fixed light intensity and camera parameters to match

- Fixed and improved auto-exposure camera (histogram exposure mode)

- Fixed delay in the TCP communication from server to the client in synchronous mode for Linux

- Fixed large RAM usage when loading polynomial geometry from OpenDRIVE

- Fixed collision issues when debug.draw_line() is called

- Fixed gyroscope sensor to properly give angular velocity readings in the local frame

- Fixed minor typo in the introduction section of the documentation

- Fixed a bug at the local planner when changing the route, causing it to maintain the first part of the previous one. This was only relevant when using very large buffer sizes

CARLA AD Leaderboard Announcement

Find out more in the official site!